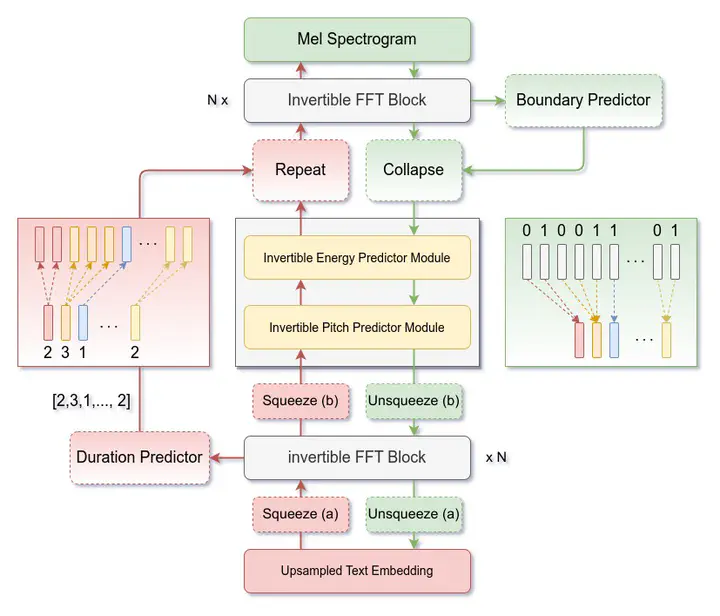

Architecture of the proposed Duplex Speech Chain model

Abstract

This paper explores reversible neural network layers for building a duplex speech chain model that makes effective use of the bidirectional supervision signals available in parallel datasets. Existing methods that use bidirectional supervision signals fall into two main groups: general multi-task learning and cycle consistency. Both groups exploit bidirectional supervision, but each has its own limitations. To address these limitations and build a duplex model for the two directions of the speech task, speech synthesis and speech recognition, we propose reversible modules and operations that handle the length discrepancy between text and speech. The proposed model is the first duplex sequence-to-sequence model that addresses both speech synthesis and speech recognition. This work also introduces reversible neural networks to speech-related tasks. Finally, we analyze how the use of bidirectional supervision signals affects the performance of the duplex model.
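For readers unfamiliar with reversible layers, the following is a minimal sketch of an additive coupling layer, a common reversible building block in the flow-based model literature. It is an illustration only and is not claimed to be the module proposed in this paper: the function names and the `shift` sub-network are hypothetical, and the sketch does not handle the length discrepancy between text and speech. It shows only that one set of parameters can define an exactly invertible mapping, which is the property a duplex model relies on.

```python
import math


def shift(h):
    # Hypothetical sub-network. It can be any function of h and
    # does not itself need to be invertible.
    return [math.tanh(2.0 * v) + 0.5 for v in h]


def coupling_forward(x):
    # Split the input in half, keep the first half unchanged,
    # and shift the second half by a function of the first half.
    mid = len(x) // 2
    x1, x2 = x[:mid], x[mid:]
    y2 = [b + s for b, s in zip(x2, shift(x1))]
    return x1 + y2


def coupling_inverse(y):
    # The first half passed through unchanged, so the same shift can be
    # recomputed from it and subtracted to recover the second half.
    mid = len(y) // 2
    y1, y2 = y[:mid], y[mid:]
    x2 = [b - s for b, s in zip(y2, shift(y1))]
    return y1 + x2


# Assumed toy input of even length.
x = [0.3, -1.2, 0.8, 2.5]
y = coupling_forward(x)
x_rec = coupling_inverse(y)

# Reconstruction error is zero up to floating-point rounding.
max_err = max(abs(a - b) for a, b in zip(x, x_rec))
print(max_err)
```

Because the forward and inverse passes share the same `shift` function, a supervision signal applied in either direction updates the same parameters.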